Denoising AutoEncoder

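* When training an autoencoder, deliberately corrupting the input with noise can make it learn more useful features, because it can no longer succeed by simply copying its input to its output. Concretely, the encoder receives the original data and corrupts it, either by adding random noise (e.g., Gaussian noise) or by using dropout to switch off part of the input, and builds the feature vector (coding) from this corrupted version. The decoder is then trained to reconstruct the original, noise-free data from that feature vector.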
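The idea can be sketched in a few lines of NumPy: the model input is a corrupted copy of each image, while the training target stays the clean original. The helper names and the random stand-in data are hypothetical (not from the book); in the Keras models below, the corruption is done by a GaussianNoise or Dropout layer inside the encoder instead. The last lines show one consequence: simply copying the input to the output no longer gives zero error, which is what pushes the network toward more useful features.

```python
import numpy as np

def corrupt(X, stddev=0.2, rng=None):
    """Hypothetical helper: add zero-mean Gaussian noise to every pixel."""
    rng = np.random.default_rng() if rng is None else rng
    return X + rng.normal(0.0, stddev, size=X.shape)

def make_denoising_batch(X, stddev=0.2, rng=None):
    """Return (model input, training target): the input is corrupted,
    the target is the untouched original."""
    return corrupt(X, stddev, rng), X

rng = np.random.default_rng(42)
X = rng.random((100, 28, 28))              # stand-in images, pixels in [0, 1]
X_in, X_target = make_denoising_batch(X, stddev=0.2, rng=rng)

# A model that just copies its input is no longer perfect:
identity_mse = np.mean((X_in - X_target) ** 2)
print(identity_mse)                        # close to 0.2 ** 2 = 0.04
```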

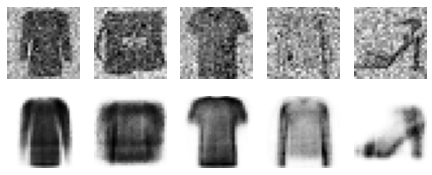

1) Using Gaussian noise

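```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# Setup from the earlier posts in this series (assumed): Fashion MNIST scaled to [0, 1],
# split into 55,000 training and 5,000 validation images, plus the rounded_accuracy metric.
(X_train_full, _), _ = keras.datasets.fashion_mnist.load_data()
X_train_full = X_train_full.astype(np.float32) / 255
X_train, X_valid = X_train_full[:-5000], X_train_full[-5000:]

def rounded_accuracy(y_true, y_pred):
    return keras.metrics.binary_accuracy(tf.round(y_true), tf.round(y_pred))

tf.random.set_seed(42)
np.random.seed(42)

# Encoder: corrupt the inputs with Gaussian noise (stddev 0.2), then compress 784 -> 100 -> 30
denoising_encoder = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.GaussianNoise(0.2),
    keras.layers.Dense(100, activation="selu"),
    keras.layers.Dense(30, activation="selu")
])
# Decoder: map the 30-dim coding back to a 28x28 image with pixel values in [0, 1]
denoising_decoder = keras.models.Sequential([
    keras.layers.Dense(100, activation="selu", input_shape=[30]),
    keras.layers.Dense(28 * 28, activation="sigmoid"),
    keras.layers.Reshape([28, 28])
])
denoising_ae = keras.models.Sequential([denoising_encoder, denoising_decoder])
denoising_ae.compile(loss="binary_crossentropy", optimizer=keras.optimizers.SGD(learning_rate=1.0),
                     metrics=[rounded_accuracy])
# Inputs and targets are the same clean images; the GaussianNoise layer adds noise
# to the inputs internally, and only while training
history = denoising_ae.fit(X_train, X_train, epochs=10,
                           validation_data=(X_valid, X_valid))
```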

```
Epoch 1/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3509 - rounded_accuracy: 0.8766 - val_loss: 0.3182 - val_rounded_accuracy: 0.9036
Epoch 2/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3121 - rounded_accuracy: 0.9097 - val_loss: 0.3086 - val_rounded_accuracy: 0.9156
Epoch 3/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3057 - rounded_accuracy: 0.9152 - val_loss: 0.3035 - val_rounded_accuracy: 0.9191
Epoch 4/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3021 - rounded_accuracy: 0.9183 - val_loss: 0.2999 - val_rounded_accuracy: 0.9207
Epoch 5/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.2992 - rounded_accuracy: 0.9209 - val_loss: 0.2968 - val_rounded_accuracy: 0.9252
Epoch 6/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.2969 - rounded_accuracy: 0.9229 - val_loss: 0.2947 - val_rounded_accuracy: 0.9272
Epoch 7/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.2953 - rounded_accuracy: 0.9243 - val_loss: 0.2946 - val_rounded_accuracy: 0.9275
Epoch 8/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.2940 - rounded_accuracy: 0.9254 - val_loss: 0.2931 - val_rounded_accuracy: 0.9296
Epoch 9/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.2931 - rounded_accuracy: 0.9262 - val_loss: 0.2923 - val_rounded_accuracy: 0.9266
Epoch 10/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.2922 - rounded_accuracy: 0.9270 - val_loss: 0.2907 - val_rounded_accuracy: 0.9299
```

* The model was trained with Gaussian noise (standard deviation 0.2) added to its inputs.
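The training logs report `loss` (binary cross-entropy) and `rounded_accuracy`. The metric is not defined in this post; it is presumably the one from the earlier Stacked AutoEncoder post, which rounds both the targets and the predictions to 0 or 1 and measures the share of pixels that agree. The NumPy sketch below computes both quantities (the function names are hypothetical). It also hints at part of the reason the loss levels off near 0.29 rather than approaching 0: with grayscale targets, even a perfect reconstruction has non-zero cross-entropy.

```python
import numpy as np

def rounded_accuracy_np(y_true, y_pred):
    """Share of pixels where round(target) == round(prediction)."""
    return np.mean(np.round(y_true) == np.round(y_pred))

def binary_crossentropy_np(y_true, y_pred, eps=1e-7):
    """Mean per-pixel binary cross-entropy; pixel intensities in [0, 1]
    are treated as probabilities. Predictions are clipped to avoid log(0)."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return np.mean(-(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)))

y_true = np.array([0.0, 0.3, 0.8, 1.0])
y_pred = np.array([0.1, 0.6, 0.7, 0.9])
print(rounded_accuracy_np(y_true, y_pred))  # 0.75: 0.3 rounds to 0 but 0.6 rounds to 1

# Even a perfect reconstruction of a gray pixel (0.3) has non-zero loss:
print(binary_crossentropy_np(np.array([0.3]), np.array([0.3])))  # about 0.611
```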

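```python
import matplotlib.pyplot as plt

tf.random.set_seed(42)
np.random.seed(42)

# GaussianNoise is a no-op at inference, so create noisy test images explicitly (training=True)
noise = keras.layers.GaussianNoise(0.2)
# show_reconstructions() is the plotting helper defined in the earlier posts of this series
show_reconstructions(denoising_ae, noise(X_valid, training=True))
plt.show()
```

* At test time, regularization layers such as keras.layers.GaussianNoise are inactive, so the validation images were first passed through a separate GaussianNoise layer (called with training=True) and the noisy result was fed to the model.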
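The train/test difference can be mimicked in NumPy: noise is added only when `training=True`, and the layer returns its input unchanged otherwise, which is why noisy test images had to be built explicitly above. The function below is a hypothetical stand-in for the behavior of keras.layers.GaussianNoise, not its actual implementation.

```python
import numpy as np

def gaussian_noise_layer(x, stddev=0.2, training=False, rng=None):
    """Hypothetical NumPy mimic of GaussianNoise semantics: adds N(0, stddev^2)
    noise only when training=True; otherwise returns the input unchanged."""
    if not training:
        return x
    rng = np.random.default_rng() if rng is None else rng
    return x + rng.normal(0.0, stddev, size=np.shape(x))

rng = np.random.default_rng(42)
x = rng.random((1000, 28, 28))                             # stand-in pixels in [0, 1]
x_infer = gaussian_noise_layer(x)                          # inference: nothing happens
x_fit = gaussian_noise_layer(x, training=True, rng=rng)    # training: noisy copy
print(np.array_equal(x_infer, x))                          # True
print(np.std(x_fit - x))                                   # close to 0.2
```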

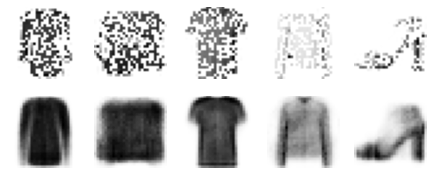

2) Using Dropout

```python
# Assumes the same imports, data (X_train / X_valid) and rounded_accuracy metric as above
tf.random.set_seed(42)
np.random.seed(42)

# Encoder: randomly drop 50% of the input pixels, then compress 784 -> 100 -> 30
dropout_encoder = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dropout(0.5),
    keras.layers.Dense(100, activation="selu"),
    keras.layers.Dense(30, activation="selu")
])
# Decoder: same architecture as in the Gaussian noise model
dropout_decoder = keras.models.Sequential([
    keras.layers.Dense(100, activation="selu", input_shape=[30]),
    keras.layers.Dense(28 * 28, activation="sigmoid"),
    keras.layers.Reshape([28, 28])
])
dropout_ae = keras.models.Sequential([dropout_encoder, dropout_decoder])
dropout_ae.compile(loss="binary_crossentropy", optimizer=keras.optimizers.SGD(learning_rate=1.0),
                   metrics=[rounded_accuracy])
history = dropout_ae.fit(X_train, X_train, epochs=10,
                         validation_data=(X_valid, X_valid))
```

```
Epoch 1/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3568 - rounded_accuracy: 0.8710 - val_loss: 0.3200 - val_rounded_accuracy: 0.9041
Epoch 2/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3182 - rounded_accuracy: 0.9032 - val_loss: 0.3125 - val_rounded_accuracy: 0.9110
Epoch 3/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3128 - rounded_accuracy: 0.9075 - val_loss: 0.3075 - val_rounded_accuracy: 0.9152
Epoch 4/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3092 - rounded_accuracy: 0.9102 - val_loss: 0.3041 - val_rounded_accuracy: 0.9178
Epoch 5/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3067 - rounded_accuracy: 0.9123 - val_loss: 0.3015 - val_rounded_accuracy: 0.9193
Epoch 6/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3048 - rounded_accuracy: 0.9139 - val_loss: 0.3014 - val_rounded_accuracy: 0.9173
Epoch 7/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3033 - rounded_accuracy: 0.9151 - val_loss: 0.2995 - val_rounded_accuracy: 0.9210
Epoch 8/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3022 - rounded_accuracy: 0.9159 - val_loss: 0.2978 - val_rounded_accuracy: 0.9229
Epoch 9/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3012 - rounded_accuracy: 0.9167 - val_loss: 0.2971 - val_rounded_accuracy: 0.9221
Epoch 10/10
1719/1719 [==============================] - 5s 3ms/step - loss: 0.3003 - rounded_accuracy: 0.9175 - val_loss: 0.2958 - val_rounded_accuracy: 0.9238
```

* This time the model was trained with Dropout (rate 0.5) applied to its inputs instead of Gaussian noise.
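Both autoencoders share the same Dense stack, and Flatten, Reshape, GaussianNoise and Dropout have no trainable weights, so the two models are the same size and differ only in how the input is corrupted. As a quick check, this plain-Python sketch counts the parameters by hand (the helper name is hypothetical); `model.summary()` on either model should report the same total.

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a Dense layer: one weight per input-unit pair plus one bias per unit."""
    return n_inputs * n_units + n_units

encoder_params = dense_params(28 * 28, 100) + dense_params(100, 30)   # 78,500 + 3,030
decoder_params = dense_params(30, 100) + dense_params(100, 28 * 28)   # 3,100 + 79,184
print(encoder_params, decoder_params, encoder_params + decoder_params)
# 81530 82284 163814
```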

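```python
tf.random.set_seed(42)
np.random.seed(42)

# Dropout is also a no-op at inference, so apply it explicitly with training=True
dropout = keras.layers.Dropout(0.5)
show_reconstructions(dropout_ae, dropout(X_valid, training=True))
plt.show()
```

* As with the Gaussian noise model, Dropout is a regularization layer that does nothing at test time, so dropout has to be applied to the test inputs separately before feeding them to the model.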
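Keras's Dropout layer uses "inverted" dropout: during training it zeroes each value with probability `rate` and scales the survivors by 1 / (1 - rate), and at inference it passes the input through unchanged. So `dropout(X_valid, training=True)` above not only blanks roughly half of the pixels but also doubles the remaining ones, matching what the encoder saw during training. The NumPy function below is a hypothetical mimic of that behavior, not Keras's implementation.

```python
import numpy as np

def dropout_layer(x, rate=0.5, training=False, rng=None):
    """Hypothetical NumPy mimic of inverted dropout (Keras-style semantics)."""
    if not training:
        return x  # inference: identity, nothing is dropped
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(np.shape(x)) >= rate      # keep each value with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
x = np.full(10_000, 0.8)                   # stand-in for pixels with intensity 0.8
y = dropout_layer(x, rate=0.5, training=True, rng=rng)
print(np.unique(y))                        # only 0.0 (dropped) and 1.6 (kept and doubled)
print(round(float(y.mean()), 1))           # 0.8: the expected value is preserved
```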
